Have you ever felt like you were being tracked by someone or something while navigating through the internet? Well, you are. But don't panic. I'm going to talk about some positive aspects of these tracking practices that might shine some light on what the future beholds to these user activities tracking practices on the internet. But you be the judge.

First, let's talk about the tool behind this: Natural Language Processing. What is Natural Language Processing, you say? Well, I'm glad you asked. It is a subdiscipline of Artificial intelligence that attempts to get computers to perform useful tasks involving human language, tasks like enabling human-machine communication, improving human-human communication, or simply doing useful processing of text or speech (Jurafsky & Martin, 2008). This means that Natural Language Processing is a multidisciplinary field that mixes linguistics and computer engineering to process written or spoken natural languages (English, Spanish, Chinese, etc.).

Where can you see examples of this field? Well, every day you will see it more and more clearly, and I hope that after reading this article you will get to understand the essence behind it from a nice and general overview. Examples of this field can be seen on smart speakers and phones virtual assistants which you can speak to, and they will "understand you". You can see it on grammar check services online or in text editors too, and translation services.

What is the main issue when working with NLP? When working with language processing, it is important to understand the main issue of analyzing natural language, which is that said languages are ambiguous. This means that the same word under different contexts can mean different things, and that some sentences are not always accurate when expressing an idea or a request. But, as these sentences are often used, they make their way into everyday life, and we all understand them despite them meaning something different than what intended if analyzed properly. For example:

Example 1: 'Mr. Smith was found guilty of keeping a protected animal in the Atherton Magistrates Court after being charged with removing a scrub python from a resident's property.

- Problem: This sentence is ambiguous because it is unclear whether Mr. Smith was guilty of keeping the snake in the Magistrates Court, or guilty of keeping the snake after he caught it from a neighbor’s property.

Corrected: In the Atherton Magistrates Court, Mr. Smith was found guilty of keeping a protected animal, a scrub python, after removing it from a resident's property.’ (Taken from University of Newcastle, Library guides)

Example 2: Call me a taxi, please.

- Problem: This sentence is ambiguous because it is not clear if the person is asking to be called "a taxi", if he wants somebody to call a taxi (vehicle) for him, or if he wants somebody to call a taxi company to get taxi service.

- Corrected: Call a taxi company number and ask them to get a taxi driver to come pick me up, please.

In both of these sentences, we as humans (or at least most of us) instantly get the meaning of the sentence by itself without the need for specification or reformulation of it. But it is not the same case with computers, they need to take the analysis to a whole other level to get the true meaning of what the human intended to say, and it is always a guess. A computer will try to get as close to this true meaning as possible, but well, ain't it what we all do when communicating?

How can we remove this ambiguity? By CLASSIFYING words. We got names, adjectives, adverbs, prepositions, conjunctions, and many more grammar classifications. But more than that, words have topics, they fit into a context that makes them make sense to us. So, the main job that we need to do with our language processing algorithm is to classify words and have them stored so that when we stumble into a new text with new words, our computers can understand these new words.

- So, what is going on behind the scenes? How does this work? There are many ways of implementing classification algorithms. Most of them involve plenty of probabilistic mathematics and analysis, which thankfully are more often than not already standardized and included in some programming languages libraries for users to use with little concern for what goes on underneath the code lines. (Scikit-Learn' on Python, for example)

I'm going to describe a common process that involves the use of three main components:

- Corpus

- Word Embeddings

- Probability equations

Corpus It is a collection of linguistic material of real examples of language use. It takes the form of a collection of documents with some desired characteristics like whether it is written or spoken, the language used, the type of text (scientific, news, literary, etc.), theme (sports, art, computer science, etc.), with or without tags, etc. This corpus is divided in two: The Training Corpus and the Test Corpus. The proportions are usually chosen to use as much training corpus as possible (80% training corpus, 20% test corpus). It is from the training corpus where we build the model that we will use to classify words. In this example, we are going to choose Word Embeddings to build our model.

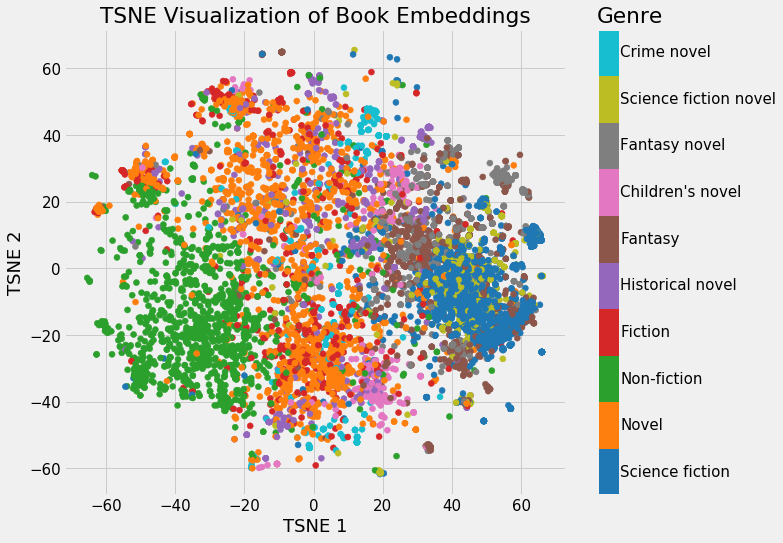

Word Embeddings This practice proposes displaying the meaning of words with vectors. The way this is done is by mapping thousands of words into a vector of n real numbers. This implementation does not require the corpus to have tags, which is great as many corpuses don't. Vectors for representing words are generally called embeddings, because the word is embedded in a particular vector space, where similar words end up "nearby" in this vector space. I don't want to get too deep into this concept as it is quite heavy and gets away from the main topic of this article. For more information about word embeddings, you can check neural networks, which are quite popular, and use this method.

Embedding of all 37,000 books on Wikipedia, colored by genre. (From Jupyter Notebook on GitHub).

- Probability equations As mentioned in the previous item, it is not my intention to get too deep into technical stuff, so let's take a nice look at what happens in the mathematical aspect of the algorithm, from a distance. Now it's time to classify. We have our words nicely vectorized in a vector space where their separation indicates how similar they are/ So, we need to create our classifying function. Typically, neural net language models are constructed and trained as probabilistic classifiers that learn to predict a probability distribution: P(wt|wt-k,...,wt-1) ∀ t∈V. What this equation does is calculate the probability of a word (wt) being of a certain classification (we classify between two possible classifications. For example, funny/not funny, violent/not violent, sports/not sports, etc.) given a certain context, which in this case are the previous words to the one being calculated in the corpus being analyzed. Once that equation is implemented, we have our classifier going, and we're ready to process some natural language.

How is this useful? As a way of introducing the potential of natural language processing, I am going to talk about an example taken from reality. Let's say that you are the mayor of a city, and people are complaining a lot about garbage disposal management in said city. So, you decide you want to do something about it. The problem? People don't like formally complaining through official resources provided by your city government, as they think that it takes too long or they don't even trust them. But, you realize that there are many complaints on Twitter and feel like it could be a potential there for you to exploit and get your hands on these complaints on time. So, you decide to use what you learned in this article and get the bull by the horns in this situation. The first thing you do is get a hold of the official API provided by Twitter to get you a set of Tweets that will be your work set. To do this, you use the provided filter and choose some keywords to get your desired set: Your city name, words like garbage, disposal, management, your name, etc. Once that is done, you find yourself with a nice huge set of tweets. So, what now? Your ultimate goal is to identify relevant events (garbage disposal problems in the city, where you should take a team to so they can address it properly, which we will call EVENTS), but to do this you could help the process by filtering the tweet set even more. To do this, you follow the following steps, using five modules on your program:

Geo Reference Module: Provided by your favorite maps API, it checks whether the tweets are from your city or not.

Image Processing Module: Provided by your favorite image processing API (maybe Google's Cloud Vision API), if a tweet contains an image, it analyzes it and checks whether it has something visual related to garbage disposal.

Complaint Classifier Module: You use what you learned in this article here. For a corpus, you may use your formal complaint service where you know that the texts provided there are complaints, and you may also find some other useful corpus on the internet.

Garbage Disposal Classifier Module: Once again, you are capable of creating this module thanks to this awesome article.

Complaint Classifier Module: Once the previous four modules run, you are left with a nice filtered set where you can identify relevant events with more ease. You can classify them into different classes: 1) Very Useful: Refers to garbage disposal events in your city and contains a related image (EVENT). 2) Useful: Refers to garbage disposal events in your city and contains location. 3) Somewhat Useful: Refers to garbage disposal events in your city, without a location. 4) Not Useful: Does not refer to garbage disposal events in your city.

Lastly, you can show these events on a map and set your teams to work on them as soon as possible. Meanwhile, your algorithm is still working and ready to get some new events for you.

So, we have seen how useful these practices can be, and with just a small example, this can be taken to other complexity levels and make a lot out of it. But, what do you think? Is this ethical? Are you ok with "people" (machines) analyzing your data? Can this be used for evil purposes? Are governments or institutions doing the right thing with it? Whatever the case is, now you are aware of it and can go spill your knowledge to your friends and family while looking like a pro.